feat: merge develop (#123)

* Support hybrid vector retrieval * Enable figures and table reading in Azure DI * Retrieve with multi-modal * Fix mixing up table * Add txt loader * Add Anthropic Chat * Raising error when retrieving help file * Allow same filename for different people if private is True * Allow declaring extra LLM vendors * Show chunks on the File page * Allow elasticsearch to get more docs * Fix Cohere response (#86) * Fix Cohere response * Remove Adobe pdfservice from dependency kotaemon doesn't rely more pdfservice for its core functionality, and pdfservice uses very out-dated dependency that causes conflict. --------- Co-authored-by: trducng <trungduc1992@gmail.com> * Add confidence score (#87) * Save question answering data as a log file * Save the original information besides the rewritten info * Export Cohere relevance score as confidence score * Fix style check * Upgrade the confidence score appearance (#90) * Highlight the relevance score * Round relevance score. Get key from config instead of env * Cohere return all scores * Display relevance score for image * Remove columns and rows in Excel loader which contains all NaN (#91) * remove columns and rows which contains all NaN * back to multiple joiner options * Fix style --------- Co-authored-by: linhnguyen-cinnamon <cinmc0019@CINMC0019-LinhNguyen.local> Co-authored-by: trducng <trungduc1992@gmail.com> * Track retriever state * Bump llama-index version 0.10 * feat/save-azuredi-mhtml-to-markdown (#93) * feat/save-azuredi-mhtml-to-markdown * fix: replace os.path to pathlib change theflow.settings * refactor: base on pre-commit * chore: move the func of saving content markdown above removed_spans --------- Co-authored-by: jacky0218 <jacky0218@github.com> * fix: losing first chunk (#94) * fix: losing first chunk. * fix: update the method of preventing losing chunks --------- Co-authored-by: jacky0218 <jacky0218@github.com> * fix: adding the base64 image in markdown (#95) * feat: more chunk info on UI * fix: error when reindexing files * refactor: allow more information exception trace when using gpt4v * feat: add excel reader that treats each worksheet as a document * Persist loader information when indexing file * feat: allow hiding unneeded setting panels * feat: allow specific timezone when creating conversation * feat: add more confidence score (#96) * Allow a list of rerankers * Export llm reranking score instead of filter with boolean * Get logprobs from LLMs * Rename cohere reranking score * Call 2 rerankers at once * Run QA pipeline for each chunk to get qa_score * Display more relevance scores * Define another LLMScoring instead of editing the original one * Export logprobs instead of probs * Call LLMScoring * Get qa_score only in the final answer * feat: replace text length with token in file list * ui: show index name instead of id in the settings * feat(ai): restrict the vision temperature * fix(ui): remove the misleading message about non-retrieved evidences * feat(ui): show the reasoning name and description in the reasoning setting page * feat(ui): show version on the main windows * feat(ui): show default llm name in the setting page * fix(conf): append the result of doc in llm_scoring (#97) * fix: constraint maximum number of images * feat(ui): allow filter file by name in file list page * Fix exceeding token length error for OpenAI embeddings by chunking then averaging (#99) * Average embeddings in case the text exceeds max size * Add docstring * fix: Allow empty string when calling embedding * fix: update trulens LLM ranking score for retrieval confidence, improve citation (#98) * Round when displaying not by default * Add LLMTrulens reranking model * Use llmtrulensscoring in pipeline * fix: update UI display for trulen score --------- Co-authored-by: taprosoft <tadashi@cinnamon.is> * feat: add question decomposition & few-shot rewrite pipeline (#89) * Create few-shot query-rewriting. Run and display the result in info_panel * Fix style check * Put the functions to separate modules * Add zero-shot question decomposition * Fix fewshot rewriting * Add default few-shot examples * Fix decompose question * Fix importing rewriting pipelines * fix: update decompose logic in fullQA pipeline --------- Co-authored-by: taprosoft <tadashi@cinnamon.is> * fix: add encoding utf-8 when save temporal markdown in vectorIndex (#101) * fix: improve retrieval pipeline and relevant score display (#102) * fix: improve retrieval pipeline by extending first round top_k with multiplier * fix: minor fix * feat: improve UI default settings and add quick switch option for pipeline * fix: improve agent logics (#103) * fix: improve agent progres display * fix: update retrieval logic * fix: UI display * fix: less verbose debug log * feat: add warning message for low confidence * fix: LLM scoring enabled by default * fix: minor update logics * fix: hotfix image citation * feat: update docx loader for handle merged table cells + handle zip file upload (#104) * feat: update docx loader for handle merged table cells * feat: handle zip file * refactor: pre-commit * fix: escape text in download UI * feat: optimize vector store query db (#105) * feat: optimize vector store query db * feat: add file_id to chroma metadatas * feat: remove unnecessary logs and update migrate script * feat: iterate through file index * fix: remove unused code --------- Co-authored-by: taprosoft <tadashi@cinnamon.is> * fix: add openai embedidng exponential back-off * fix: update import download_loader * refactor: codespell * fix: update some default settings * fix: update installation instruction * fix: default chunk length in simple QA * feat: add share converstation feature and enable retrieval history (#108) * feat: add share converstation feature and enable retrieval history * fix: update share conversation UI --------- Co-authored-by: taprosoft <tadashi@cinnamon.is> * fix: allow exponential backoff for failed OCR call (#109) * fix: update default prompt when no retrieval is used * fix: create embedding for long image chunks * fix: add exception handling for additional table retriever * fix: clean conversation & file selection UI * fix: elastic search with empty doc_ids * feat: add thumbnail PDF reader for quick multimodal QA * feat: add thumbnail handling logic in indexing * fix: UI text update * fix: PDF thumb loader page number logic * feat: add quick indexing pipeline and update UI * feat: add conv name suggestion * fix: minor UI change * feat: citation in thread * fix: add conv name suggestion in regen * chore: add assets for usage doc * chore: update usage doc * feat: pdf viewer (#110) * feat: update pdfviewer * feat: update missing files * fix: update rendering logic of infor panel * fix: improve thumbnail retrieval logic * fix: update PDF evidence rendering logic * fix: remove pdfjs built dist * fix: reduce thumbnail evidence count * chore: update gitignore * fix: add js event on chat msg select * fix: update css for viewer * fix: add env var for PDFJS prebuilt * fix: move language setting to reasoning utils --------- Co-authored-by: phv2312 <kat87yb@gmail.com> Co-authored-by: trducng <trungduc1992@gmail.com> * feat: graph rag (#116) * fix: reload server when add/delete index * fix: rework indexing pipeline to be able to disable vectorstore and splitter if needed * feat: add graphRAG index with plot view * fix: update requirement for graphRAG and lighten unnecessary packages * feat: add knowledge network index (#118) * feat: add Knowledge Network index * fix: update reader mode setting for knet * fix: update init knet * fix: update collection name to index pipeline * fix: missing req --------- Co-authored-by: jeff52415 <jeff.yang@cinnamon.is> * fix: update info panel return for graphrag * fix: retriever setting graphrag * feat: local llm settings (#122) * feat: expose context length as reasoning setting to better fit local models * fix: update context length setting for agents * fix: rework threadpool llm call * fix: fix improve indexing logic * fix: fix improve UI * feat: add lancedb * fix: improve lancedb logic * feat: add lancedb vectorstore * fix: lighten requirement * fix: improve lanceDB vs * fix: improve UI * fix: openai retry * fix: update reqs * fix: update launch command * feat: update Dockerfile * feat: add plot history * fix: update default config * fix: remove verbose print * fix: update default setting * fix: update gradio plot return * fix: default gradio tmp * fix: improve lancedb docstore * fix: fix question decompose pipeline * feat: add multimodal reader in UI * fix: udpate docs * fix: update default settings & docker build * fix: update app startup * chore: update documentation * chore: update README * chore: update README --------- Co-authored-by: trducng <trungduc1992@gmail.com> * chore: update README * chore: update README --------- Co-authored-by: trducng <trungduc1992@gmail.com> Co-authored-by: cin-ace <ace@cinnamon.is> Co-authored-by: Linh Nguyen <70562198+linhnguyen-cinnamon@users.noreply.github.com> Co-authored-by: linhnguyen-cinnamon <cinmc0019@CINMC0019-LinhNguyen.local> Co-authored-by: cin-jacky <101088014+jacky0218@users.noreply.github.com> Co-authored-by: jacky0218 <jacky0218@github.com> Co-authored-by: kan_cin <kan@cinnamon.is> Co-authored-by: phv2312 <kat87yb@gmail.com> Co-authored-by: jeff52415 <jeff.yang@cinnamon.is>

13

.dockerignore

Normal file

|

|

@ -0,0 +1,13 @@

|

|||

.github/

|

||||

.git/

|

||||

.mypy_cache/

|

||||

__pycache__/

|

||||

ktem_app_data/

|

||||

env/

|

||||

.pre-commit-config.yaml

|

||||

.commitlintrc

|

||||

.gitignore

|

||||

.gitattributes

|

||||

README.md

|

||||

*.zip

|

||||

*.sh

|

||||

25

.env

|

|

@ -1,8 +1,8 @@

|

|||

# settings for OpenAI

|

||||

OPENAI_API_BASE=https://api.openai.com/v1

|

||||

OPENAI_API_KEY=

|

||||

OPENAI_CHAT_MODEL=gpt-3.5-turbo

|

||||

OPENAI_EMBEDDINGS_MODEL=text-embedding-ada-002

|

||||

OPENAI_API_KEY=openai_key

|

||||

OPENAI_CHAT_MODEL=gpt-4o

|

||||

OPENAI_EMBEDDINGS_MODEL=text-embedding-3-small

|

||||

|

||||

# settings for Azure OpenAI

|

||||

AZURE_OPENAI_ENDPOINT=

|

||||

|

|

@ -15,4 +15,21 @@ AZURE_OPENAI_EMBEDDINGS_DEPLOYMENT=text-embedding-ada-002

|

|||

COHERE_API_KEY=

|

||||

|

||||

# settings for local models

|

||||

LOCAL_MODEL=

|

||||

LOCAL_MODEL=llama3.1:8b

|

||||

LOCAL_MODEL_EMBEDDINGS=nomic-embed-text

|

||||

|

||||

# settings for GraphRAG

|

||||

GRAPHRAG_API_KEY=openai_key

|

||||

GRAPHRAG_LLM_MODEL=gpt-4o-mini

|

||||

GRAPHRAG_EMBEDDING_MODEL=text-embedding-3-small

|

||||

|

||||

# settings for Azure DI

|

||||

AZURE_DI_ENDPOINT=

|

||||

AZURE_DI_CREDENTIAL=

|

||||

|

||||

# settings for Adobe API

|

||||

PDF_SERVICES_CLIENT_ID=

|

||||

PDF_SERVICES_CLIENT_SECRET=

|

||||

|

||||

# settings for PDF.js

|

||||

PDFJS_VERSION_DIST="pdfjs-4.0.379-dist"

|

||||

|

|

|

|||

1

.gitignore

vendored

|

|

@ -471,3 +471,4 @@ doc_env/

|

|||

|

||||

# application data

|

||||

ktem_app_data/

|

||||

gradio_tmp/

|

||||

|

|

|

|||

37

Dockerfile

Normal file

|

|

@ -0,0 +1,37 @@

|

|||

# syntax=docker/dockerfile:1.0.0-experimental

|

||||

FROM python:3.10-slim as base_image

|

||||

|

||||

# for additional file parsers

|

||||

|

||||

# tesseract-ocr \

|

||||

# tesseract-ocr-jpn \

|

||||

# libsm6 \

|

||||

# libxext6 \

|

||||

# ffmpeg \

|

||||

|

||||

RUN apt update -qqy \

|

||||

&& apt install -y \

|

||||

ssh git \

|

||||

gcc g++ \

|

||||

poppler-utils \

|

||||

libpoppler-dev \

|

||||

&& \

|

||||

apt-get clean && \

|

||||

apt-get autoremove

|

||||

|

||||

ENV PYTHONDONTWRITEBYTECODE=1

|

||||

ENV PYTHONUNBUFFERED=1

|

||||

ENV PYTHONIOENCODING=UTF-8

|

||||

|

||||

WORKDIR /app

|

||||

|

||||

|

||||

FROM base_image as dev

|

||||

|

||||

COPY . /app

|

||||

RUN --mount=type=ssh pip install -e "libs/kotaemon[all]"

|

||||

RUN --mount=type=ssh pip install -e "libs/ktem"

|

||||

RUN pip install graphrag future

|

||||

RUN pip install "pdfservices-sdk@git+https://github.com/niallcm/pdfservices-python-sdk.git@bump-and-unfreeze-requirements"

|

||||

|

||||

ENTRYPOINT ["gradio", "app.py"]

|

||||

206

README.md

|

|

@ -1,12 +1,12 @@

|

|||

# kotaemon

|

||||

|

||||

An open-source tool for chatting with your documents. Built with both end users and

|

||||

An open-source clean & customizable RAG UI for chatting with your documents. Built with both end users and

|

||||

developers in mind.

|

||||

|

||||

https://github.com/Cinnamon/kotaemon/assets/25688648/815ecf68-3a02-4914-a0dd-3f8ec7e75cd9

|

||||

|

||||

|

||||

[Source Code](https://github.com/Cinnamon/kotaemon) |

|

||||

[Live Demo](https://huggingface.co/spaces/cin-model/kotaemon-public)

|

||||

[Live Demo](https://huggingface.co/spaces/taprosoft/kotaemon) |

|

||||

[Source Code](https://github.com/Cinnamon/kotaemon)

|

||||

|

||||

[User Guide](https://cinnamon.github.io/kotaemon/) |

|

||||

[Developer Guide](https://cinnamon.github.io/kotaemon/development/) |

|

||||

|

|

@ -14,20 +14,23 @@ https://github.com/Cinnamon/kotaemon/assets/25688648/815ecf68-3a02-4914-a0dd-3f8

|

|||

|

||||

[](https://www.python.org/downloads/release/python-31013/)

|

||||

[](https://github.com/psf/black)

|

||||

<a href="https://hub.docker.com/r/taprosoft/kotaemon" target="_blank">

|

||||

<img src="https://img.shields.io/badge/docker_pull-kotaemon:v1.0-brightgreen" alt="docker pull taprosoft/kotaemon:v1.0"></a>

|

||||

[](https://codeium.com)

|

||||

|

||||

This project would like to appeal to both end users who want to do QA on their

|

||||

documents and developers who want to build their own QA pipeline.

|

||||

## Introduction

|

||||

|

||||

This project serves as a functional RAG UI for both end users who want to do QA on their

|

||||

documents and developers who want to build their own RAG pipeline.

|

||||

|

||||

- For end users:

|

||||

- A local Question Answering UI for RAG-based QA.

|

||||

- A clean & minimalistic UI for RAG-based QA.

|

||||

- Supports LLM API providers (OpenAI, AzureOpenAI, Cohere, etc) and local LLMs

|

||||

(currently only GGUF format is supported via `llama-cpp-python`).

|

||||

- Easy installation scripts, no environment setup required.

|

||||

(via `ollama` and `llama-cpp-python`).

|

||||

- Easy installation scripts.

|

||||

- For developers:

|

||||

- A framework for building your own RAG-based QA pipeline.

|

||||

- See your RAG pipeline in action with the provided UI (built with Gradio).

|

||||

- Share your pipeline so that others can use it.

|

||||

- A framework for building your own RAG-based document QA pipeline.

|

||||

- Customize and see your RAG pipeline in action with the provided UI (built with Gradio).

|

||||

|

||||

```yml

|

||||

+----------------------------------------------------------------------------+

|

||||

|

|

@ -45,78 +48,128 @@ documents and developers who want to build their own QA pipeline.

|

|||

```

|

||||

|

||||

This repository is under active development. Feedback, issues, and PRs are highly

|

||||

appreciated. Your input is valuable as it helps us persuade our business guys to support

|

||||

open source.

|

||||

appreciated.

|

||||

|

||||

## Key Features

|

||||

|

||||

- **Host your own document QA (RAG) web-UI**. Support multi-user login, organize your files in private / public collections, collaborate and share your favorite chat with others.

|

||||

|

||||

- **Organize your LLM & Embedding models**. Support both local LLMs & popular API providers (OpenAI, Azure, Ollama, Groq).

|

||||

|

||||

- **Hybrid RAG pipeline**. Sane default RAG pipeline with hybrid (full-text & vector) retriever + re-ranking to ensure best retrieval quality.

|

||||

|

||||

- **Multi-modal QA support**. Perform Question Answering on multiple documents with figures & tables support. Support multi-modal document parsing (selectable options on UI).

|

||||

|

||||

- **Advance citations with document preview**. By default the system will provide detailed citations to ensure the correctness of LLM answers. View your citations (incl. relevant score) directly in the _in-browser PDF viewer_ with highlights. Warning when retrieval pipeline return low relevant articles.

|

||||

|

||||

- **Support complex reasoning methods**. Use question decomposition to answer your complex / multi-hop question. Support agent-based reasoning with ReAct, ReWOO and other agents.

|

||||

|

||||

- **Configurable settings UI**. You can adjust most important aspects of retrieval & generation process on the UI (incl. prompts).

|

||||

|

||||

- **Extensible**. Being built on Gradio, you are free to customize / add any UI elements as you like. Also, we aim to support multiple strategies for document indexing & retrieval. `GraphRAG` indexing pipeline is provided as an example.

|

||||

|

||||

|

||||

|

||||

## Installation

|

||||

|

||||

### For end users

|

||||

|

||||

This document is intended for developers. If you just want to install and use the app as

|

||||

it, please follow the [User Guide](https://cinnamon.github.io/kotaemon/).

|

||||

it is, please follow the non-technical [User Guide](https://cinnamon.github.io/kotaemon/) (WIP).

|

||||

|

||||

### For developers

|

||||

|

||||

```shell

|

||||

# Create a environment

|

||||

python -m venv kotaemon-env

|

||||

#### With Docker (recommended)

|

||||

|

||||

# Activate the environment

|

||||

source kotaemon-env/bin/activate

|

||||

- Use this command to launch the server

|

||||

|

||||

# Install the package

|

||||

pip install git+https://github.com/Cinnamon/kotaemon.git

|

||||

```

|

||||

docker run \

|

||||

-e GRADIO_SERVER_NAME=0.0.0.0 \

|

||||

-e GRADIO_SERVER_PORT=7860 \

|

||||

-p 7860:7860 -it --rm \

|

||||

taprosoft/kotaemon:v1.0

|

||||

```

|

||||

|

||||

### For Contributors

|

||||

Navigate to `http://localhost:7860/` to access the web UI.

|

||||

|

||||

#### Without Docker

|

||||

|

||||

- Clone and install required packages on a fresh python environment.

|

||||

|

||||

```shell

|

||||

# Clone the repo

|

||||

git clone git@github.com:Cinnamon/kotaemon.git

|

||||

# optional (setup env)

|

||||

conda create -n kotaemon python=3.10

|

||||

conda activate kotaemon

|

||||

|

||||

# Create a environment

|

||||

python -m venv kotaemon-env

|

||||

|

||||

# Activate the environment

|

||||

source kotaemon-env/bin/activate

|

||||

# clone this repo

|

||||

git clone https://github.com/Cinnamon/kotaemon

|

||||

cd kotaemon

|

||||

|

||||

# Install the package in editable mode

|

||||

pip install -e "libs/kotaemon[all]"

|

||||

pip install -e "libs/ktem"

|

||||

pip install -e "."

|

||||

|

||||

# Setup pre-commit

|

||||

pre-commit install

|

||||

```

|

||||

|

||||

## Creating your application

|

||||

- View and edit your environment variables (API keys, end-points) in `.env`.

|

||||

|

||||

In order to create your own application, you need to prepare these files:

|

||||

- (Optional) To enable in-browser PDF_JS viewer, download [PDF_JS_DIST](https://github.com/mozilla/pdf.js/releases/download/v4.0.379/pdfjs-4.0.379-dist.zip) and extract it to `libs/ktem/ktem/assets/prebuilt`

|

||||

|

||||

<img src="docs/images/pdf-viewer-setup.png" alt="pdf-setup" width="300">

|

||||

|

||||

- Start the web server:

|

||||

|

||||

```shell

|

||||

python app.py

|

||||

```

|

||||

|

||||

The app will be automatically launched in your browser.

|

||||

|

||||

Default username / password are: `admin` / `admin`. You can setup additional users directly on the UI.

|

||||

|

||||

|

||||

|

||||

## Customize your application

|

||||

|

||||

By default, all application data are stored in `./ktem_app_data` folder. You can backup or copy this folder to move your installation to a new machine.

|

||||

|

||||

For advance users or specific use-cases, you can customize those files:

|

||||

|

||||

- `flowsettings.py`

|

||||

- `app.py`

|

||||

- `.env` (Optional)

|

||||

- `.env`

|

||||

|

||||

### `flowsettings.py`

|

||||

|

||||

This file contains the configuration of your application. You can use the example

|

||||

[here](https://github.com/Cinnamon/kotaemon/blob/main/libs/ktem/flowsettings.py) as the

|

||||

[here](flowsettings.py) as the

|

||||

starting point.

|

||||

|

||||

### `app.py`

|

||||

<details>

|

||||

|

||||

This file is where you create your Gradio app object. This can be as simple as:

|

||||

<summary>Notable settings</summary>

|

||||

|

||||

```python

|

||||

from ktem.main import App

|

||||

```

|

||||

# setup your preferred document store (with full-text search capabilities)

|

||||

KH_DOCSTORE=(Elasticsearch | LanceDB | SimpleFileDocumentStore)

|

||||

|

||||

app = App()

|

||||

demo = app.make()

|

||||

demo.launch()

|

||||

# setup your preferred vectorstore (for vector-based search)

|

||||

KH_VECTORSTORE=(ChromaDB | LanceDB

|

||||

|

||||

# Enable / disable multimodal QA

|

||||

KH_REASONINGS_USE_MULTIMODAL=True

|

||||

|

||||

# Setup your new reasoning pipeline or modify existing one.

|

||||

KH_REASONINGS = [

|

||||

"ktem.reasoning.simple.FullQAPipeline",

|

||||

"ktem.reasoning.simple.FullDecomposeQAPipeline",

|

||||

"ktem.reasoning.react.ReactAgentPipeline",

|

||||

"ktem.reasoning.rewoo.RewooAgentPipeline",

|

||||

]

|

||||

)

|

||||

```

|

||||

|

||||

### `.env` (Optional)

|

||||

</details>

|

||||

|

||||

### `.env`

|

||||

|

||||

This file provides another way to configure your models and credentials.

|

||||

|

||||

|

|

@ -159,18 +212,22 @@ AZURE_OPENAI_EMBEDDINGS_DEPLOYMENT=text-embedding-ada-002

|

|||

|

||||

#### Local models

|

||||

|

||||

- Pros:

|

||||

- Privacy. Your documents will be stored and process locally.

|

||||

- Choices. There are a wide range of LLMs in terms of size, domain, language to choose

|

||||

from.

|

||||

- Cost. It's free.

|

||||

- Cons:

|

||||

- Quality. Local models are much smaller and thus have lower generative quality than

|

||||

paid APIs.

|

||||

- Speed. Local models are deployed using your machine so the processing speed is

|

||||

limited by your hardware.

|

||||

##### Using ollama OpenAI compatible server

|

||||

|

||||

##### Find and download a LLM

|

||||

Install [ollama](https://github.com/ollama/ollama) and start the application.

|

||||

|

||||

Pull your model (e.g):

|

||||

|

||||

```

|

||||

ollama pull llama3.1:8b

|

||||

ollama pull nomic-embed-text

|

||||

```

|

||||

|

||||

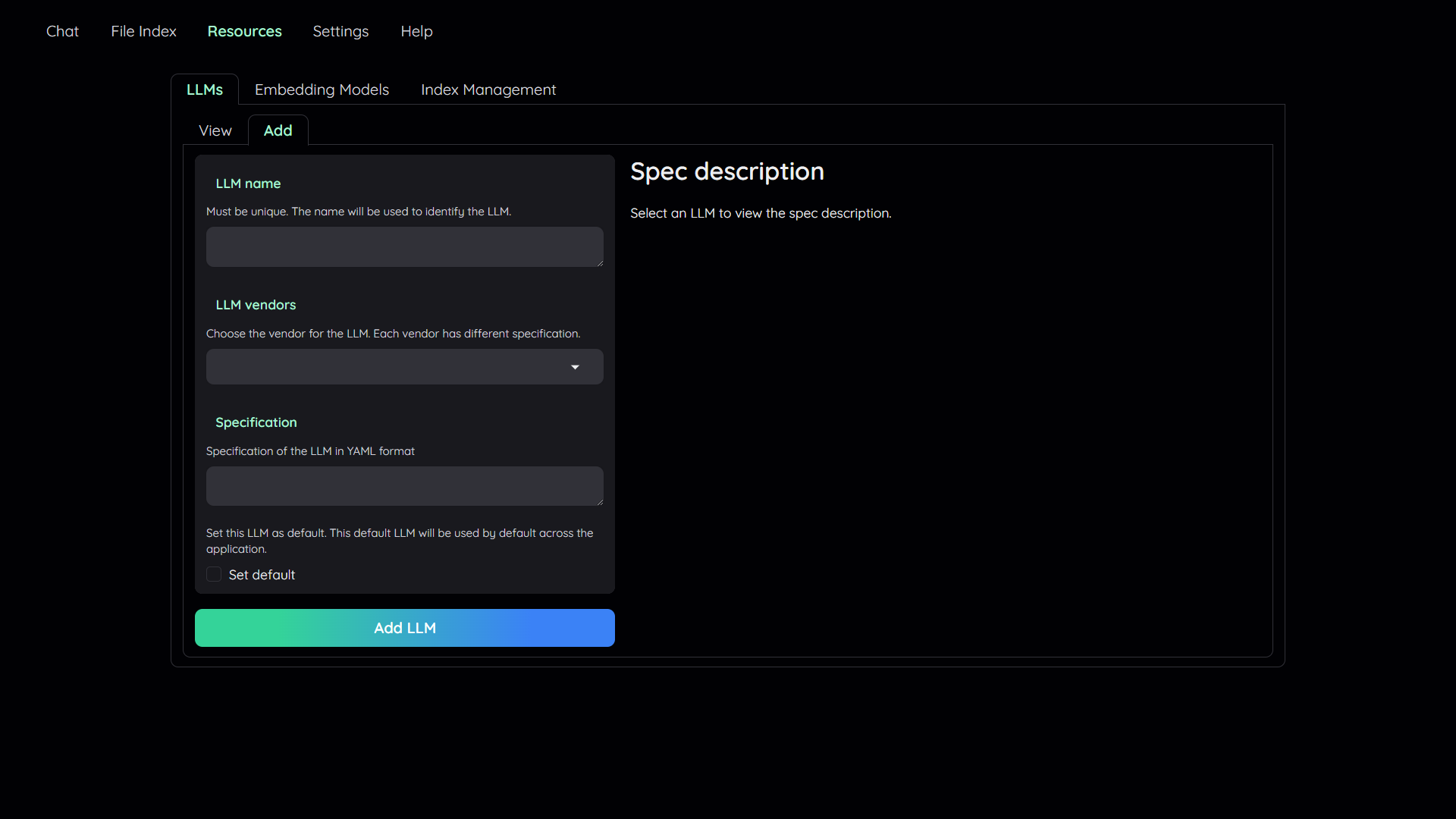

Set the model names on web UI and make it as default.

|

||||

|

||||

|

||||

|

||||

##### Using GGUF with llama-cpp-python

|

||||

|

||||

You can search and download a LLM to be ran locally from the [Hugging Face

|

||||

Hub](https://huggingface.co/models). Currently, these model formats are supported:

|

||||

|

|

@ -187,33 +244,26 @@ Here are some recommendations and their size in memory:

|

|||

- [Qwen1.5-1.8B-Chat-GGUF](https://huggingface.co/Qwen/Qwen1.5-1.8B-Chat-GGUF/resolve/main/qwen1_5-1_8b-chat-q8_0.gguf?download=true):

|

||||

around 2 GB

|

||||

|

||||

##### Enable local models

|

||||

Add a new LlamaCpp model with the provided model name on the web uI.

|

||||

|

||||

To add a local model to the model pool, set the `LOCAL_MODEL` variable in the `.env`

|

||||

file to the path of the model file.

|

||||

|

||||

```shell

|

||||

LOCAL_MODEL=<full path to your model file>

|

||||

```

|

||||

|

||||

Here is how to get the full path of your model file:

|

||||

|

||||

- On Windows 11: right click the file and select `Copy as Path`.

|

||||

</details>

|

||||

|

||||

## Start your application

|

||||

## Adding your own RAG pipeline

|

||||

|

||||

Simply run the following command:

|

||||

#### Custom reasoning pipeline

|

||||

|

||||

```shell

|

||||

python app.py

|

||||

```

|

||||

First, check the default pipeline implementation in

|

||||

[here](libs/ktem/ktem/reasoning/simple.py). You can make quick adjustment to how the default QA pipeline work.

|

||||

|

||||

The app will be automatically launched in your browser.

|

||||

Next, if you feel comfortable adding new pipeline, add new `.py` implementation in `libs/ktem/ktem/reasoning/` and later include it in `flowssettings` to enable it on the UI.

|

||||

|

||||

|

||||

#### Custom indexing pipeline

|

||||

|

||||

## Customize your application

|

||||

Check sample implementation in `libs/ktem/ktem/index/file/graph`

|

||||

|

||||

(more instruction WIP).

|

||||

|

||||

## Developer guide

|

||||

|

||||

Please refer to the [Developer Guide](https://cinnamon.github.io/kotaemon/development/)

|

||||

for more details.

|

||||

|

|

|

|||

23

app.py

|

|

@ -1,5 +1,24 @@

|

|||

from ktem.main import App

|

||||

import os

|

||||

|

||||

from theflow.settings import settings as flowsettings

|

||||

|

||||

KH_APP_DATA_DIR = getattr(flowsettings, "KH_APP_DATA_DIR", ".")

|

||||

GRADIO_TEMP_DIR = os.getenv("GRADIO_TEMP_DIR", None)

|

||||

# override GRADIO_TEMP_DIR if it's not set

|

||||

if GRADIO_TEMP_DIR is None:

|

||||

GRADIO_TEMP_DIR = os.path.join(KH_APP_DATA_DIR, "gradio_tmp")

|

||||

os.environ["GRADIO_TEMP_DIR"] = GRADIO_TEMP_DIR

|

||||

|

||||

|

||||

from ktem.main import App # noqa

|

||||

|

||||

app = App()

|

||||

demo = app.make()

|

||||

demo.queue().launch(favicon_path=app._favicon, inbrowser=True)

|

||||

demo.queue().launch(

|

||||

favicon_path=app._favicon,

|

||||

inbrowser=True,

|

||||

allowed_paths=[

|

||||

"libs/ktem/ktem/assets",

|

||||

GRADIO_TEMP_DIR,

|

||||

],

|

||||

)

|

||||

|

|

|

|||

|

|

@ -9,3 +9,6 @@ developers in mind.

|

|||

[User Guide](https://cinnamon.github.io/kotaemon/) |

|

||||

[Developer Guide](https://cinnamon.github.io/kotaemon/development/) |

|

||||

[Feedback](https://github.com/Cinnamon/kotaemon/issues)

|

||||

|

||||

[Dark Mode](?__theme=dark) |

|

||||

[Light Mode](?__theme=light)

|

||||

|

|

|

|||

BIN

docs/images/info-panel-scores.png

Normal file

|

After Width: | Height: | Size: 545 KiB |

BIN

docs/images/models.png

Normal file

|

After Width: | Height: | Size: 136 KiB |

BIN

docs/images/pdf-viewer-setup.png

Normal file

|

After Width: | Height: | Size: 38 KiB |

BIN

docs/images/preview-graph.png

Normal file

|

After Width: | Height: | Size: 288 KiB |

BIN

docs/images/preview.png

Normal file

|

After Width: | Height: | Size: 566 KiB |

|

|

@ -108,8 +108,8 @@ string rather than a string.

|

|||

## Software infrastructure

|

||||

|

||||

| Infra | Access | Schema | Ref |

|

||||

| ---------------- | ------------- | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------- |

|

||||

| SQL table Source | self.\_Source | - id (int): id of the source (auto)<br>- name (str): the name of the file<br>- path (str): the path of the file<br>- size (int): the file size in bytes<br>- text_length (int): the number of characters in the file (default 0)<br>- date_created (datetime): the time the file is created (auto) | This is SQLALchemy ORM class. Can consult |

|

||||

| ---------------- | ------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------- |

|

||||

| SQL table Source | self.\_Source | - id (int): id of the source (auto)<br>- name (str): the name of the file<br>- path (str): the path of the file<br>- size (int): the file size in bytes<br>- note (dict): allow extra optional information about the file<br>- date_created (datetime): the time the file is created (auto) | This is SQLALchemy ORM class. Can consult |

|

||||

| SQL table Index | self.\_Index | - id (int): id of the index entry (auto)<br>- source_id (int): the id of a file in the Source table<br>- target_id: the id of the segment in docstore or vector store<br>- relation_type (str): if the link is "document" or "vector" | This is SQLAlchemy ORM class |

|

||||

| Vector store | self.\_VS | - self.\_VS.add: add the list of embeddings to the vector store (optionally associate metadata and ids)<br>- self.\_VS.delete: delete vector entries based on ids<br>- self.\_VS.query: get embeddings based on embeddings. | kotaemon > storages > vectorstores > BaseVectorStore |

|

||||

| Doc store | self.\_DS | - self.\_DS.add: add the segments to document stores<br>- self.\_DS.get: get the segments based on id<br>- self.\_DS.get_all: get all segments<br>- self.\_DS.delete: delete segments based on id | kotaemon > storages > docstores > base > BaseDocumentStore |

|

||||

|

|

|

|||

|

|

@ -1,5 +1,3 @@

|

|||

# Basic Usage

|

||||

|

||||

## 1. Add your AI models

|

||||

|

||||

|

||||

|

|

@ -63,12 +61,15 @@ AZURE_OPENAI_EMBEDDINGS_DEPLOYMENT=text-embedding-ada-002 # change to your deplo

|

|||

|

||||

### Local models

|

||||

|

||||

- Pros:

|

||||

Pros:

|

||||

|

||||

- Privacy. Your documents will be stored and process locally.

|

||||

- Choices. There are a wide range of LLMs in terms of size, domain, language to choose

|

||||

from.

|

||||

- Cost. It's free.

|

||||

- Cons:

|

||||

|

||||

Cons:

|

||||

|

||||

- Quality. Local models are much smaller and thus have lower generative quality than

|

||||

paid APIs.

|

||||

- Speed. Local models are deployed using your machine so the processing speed is

|

||||

|

|

@ -136,6 +137,21 @@ Now navigate back to the `Chat` tab. The chat tab is divided into 3 regions:

|

|||

files will be considered during chat.

|

||||

2. Chat Panel

|

||||

- This is where you can chat with the chatbot.

|

||||

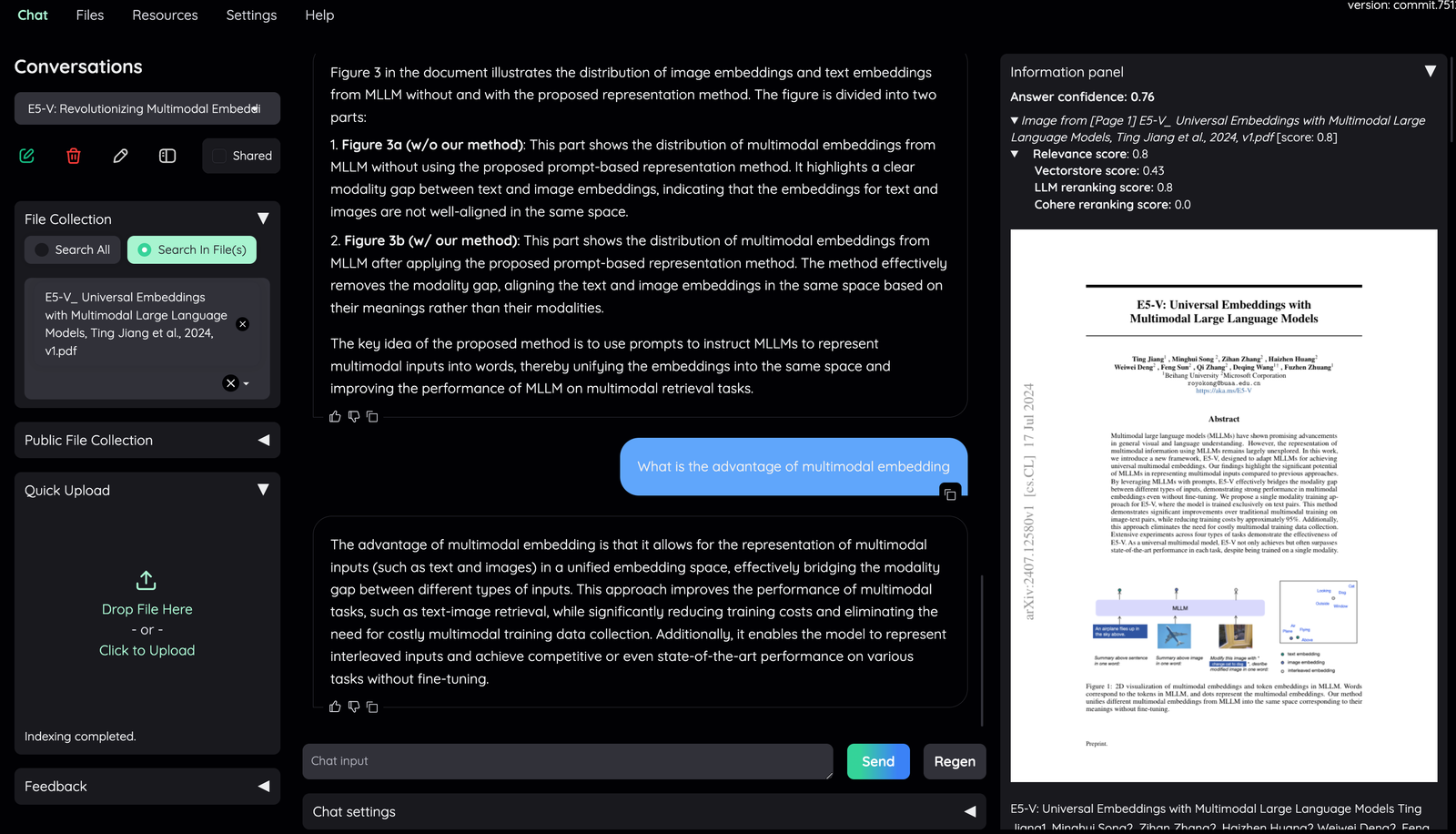

3. Information panel

|

||||

- Supporting information such as the retrieved evidence and reference will be

|

||||

3. Information Panel

|

||||

|

||||

|

||||

|

||||

- Supporting information such as the retrieved evidence and reference will be

|

||||

displayed here.

|

||||

- Direct citation for the answer produced by the LLM is highlighted.

|

||||

- The confidence score of the answer and relevant scores of evidences are displayed to quickly assess the quality of the answer and retrieved content.

|

||||

|

||||

- Meaning of the score displayed:

|

||||

- **Answer confidence**: answer confidence level from the LLM model.

|

||||

- **Relevance score**: overall relevant score between evidence and user question.

|

||||

- **Vectorstore score**: relevant score from vector embedding similarity calculation (show `full-text search` if retrieved from full-text search DB).

|

||||

- **LLM relevant score**: relevant score from LLM model (which judge relevancy between question and evidence using specific prompt).

|

||||

- **Reranking score**: relevant score from Cohere [reranking model](https://cohere.com/rerank).

|

||||

|

||||

Generally, the score quality is `LLM relevant score` > `Reranking score` > `Vectorscore`.

|

||||

By default, overall relevance score is taken directly from LLM relevant score. Evidences are sorted based on their overall relevance score and whether they have citation or not.

|

||||

|

|

|

|||

153

flowsettings.py

|

|

@ -15,7 +15,7 @@ this_dir = Path(this_file).parent

|

|||

# change this if your app use a different name

|

||||

KH_PACKAGE_NAME = "kotaemon_app"

|

||||

|

||||

KH_APP_VERSION = os.environ.get("KH_APP_VERSION", None)

|

||||

KH_APP_VERSION = config("KH_APP_VERSION", "local")

|

||||

if not KH_APP_VERSION:

|

||||

try:

|

||||

# Caution: This might produce the wrong version

|

||||

|

|

@ -33,8 +33,21 @@ KH_APP_DATA_DIR.mkdir(parents=True, exist_ok=True)

|

|||

KH_USER_DATA_DIR = KH_APP_DATA_DIR / "user_data"

|

||||

KH_USER_DATA_DIR.mkdir(parents=True, exist_ok=True)

|

||||

|

||||

# doc directory

|

||||

KH_DOC_DIR = this_dir / "docs"

|

||||

# markdown output directory

|

||||

KH_MARKDOWN_OUTPUT_DIR = KH_APP_DATA_DIR / "markdown_cache_dir"

|

||||

KH_MARKDOWN_OUTPUT_DIR.mkdir(parents=True, exist_ok=True)

|

||||

|

||||

# chunks output directory

|

||||

KH_CHUNKS_OUTPUT_DIR = KH_APP_DATA_DIR / "chunks_cache_dir"

|

||||

KH_CHUNKS_OUTPUT_DIR.mkdir(parents=True, exist_ok=True)

|

||||

|

||||

# zip output directory

|

||||

KH_ZIP_OUTPUT_DIR = KH_APP_DATA_DIR / "zip_cache_dir"

|

||||

KH_ZIP_OUTPUT_DIR.mkdir(parents=True, exist_ok=True)

|

||||

|

||||

# zip input directory

|

||||

KH_ZIP_INPUT_DIR = KH_APP_DATA_DIR / "zip_cache_dir_in"

|

||||

KH_ZIP_INPUT_DIR.mkdir(parents=True, exist_ok=True)

|

||||

|

||||

# HF models can be big, let's store them in the app data directory so that it's easier

|

||||

# for users to manage their storage.

|

||||

|

|

@ -42,24 +55,30 @@ KH_DOC_DIR = this_dir / "docs"

|

|||

os.environ["HF_HOME"] = str(KH_APP_DATA_DIR / "huggingface")

|

||||

os.environ["HF_HUB_CACHE"] = str(KH_APP_DATA_DIR / "huggingface")

|

||||

|

||||

COHERE_API_KEY = config("COHERE_API_KEY", default="")

|

||||

# doc directory

|

||||

KH_DOC_DIR = this_dir / "docs"

|

||||

|

||||

KH_MODE = "dev"

|

||||

KH_FEATURE_USER_MANAGEMENT = False

|

||||

KH_FEATURE_USER_MANAGEMENT = True

|

||||

KH_USER_CAN_SEE_PUBLIC = None

|

||||

KH_FEATURE_USER_MANAGEMENT_ADMIN = str(

|

||||

config("KH_FEATURE_USER_MANAGEMENT_ADMIN", default="admin")

|

||||

)

|

||||

KH_FEATURE_USER_MANAGEMENT_PASSWORD = str(

|

||||

config("KH_FEATURE_USER_MANAGEMENT_PASSWORD", default="XsdMbe8zKP8KdeE@")

|

||||

config("KH_FEATURE_USER_MANAGEMENT_PASSWORD", default="admin")

|

||||

)

|

||||

KH_ENABLE_ALEMBIC = False

|

||||

KH_DATABASE = f"sqlite:///{KH_USER_DATA_DIR / 'sql.db'}"

|

||||

KH_FILESTORAGE_PATH = str(KH_USER_DATA_DIR / "files")

|

||||

|

||||

KH_DOCSTORE = {

|

||||

"__type__": "kotaemon.storages.SimpleFileDocumentStore",

|

||||

# "__type__": "kotaemon.storages.ElasticsearchDocumentStore",

|

||||

# "__type__": "kotaemon.storages.SimpleFileDocumentStore",

|

||||

"__type__": "kotaemon.storages.LanceDBDocumentStore",

|

||||

"path": str(KH_USER_DATA_DIR / "docstore"),

|

||||

}

|

||||

KH_VECTORSTORE = {

|

||||

# "__type__": "kotaemon.storages.LanceDBVectorStore",

|

||||

"__type__": "kotaemon.storages.ChromaVectorStore",

|

||||

"path": str(KH_USER_DATA_DIR / "vectorstore"),

|

||||

}

|

||||

|

|

@ -83,8 +102,6 @@ if config("AZURE_OPENAI_API_KEY", default="") and config(

|

|||

"timeout": 20,

|

||||

},

|

||||

"default": False,

|

||||

"accuracy": 5,

|

||||

"cost": 5,

|

||||

}

|

||||

if config("AZURE_OPENAI_EMBEDDINGS_DEPLOYMENT", default=""):

|

||||

KH_EMBEDDINGS["azure"] = {

|

||||

|

|

@ -110,71 +127,66 @@ if config("OPENAI_API_KEY", default=""):

|

|||

"base_url": config("OPENAI_API_BASE", default="")

|

||||

or "https://api.openai.com/v1",

|

||||

"api_key": config("OPENAI_API_KEY", default=""),

|

||||

"model": config("OPENAI_CHAT_MODEL", default="") or "gpt-3.5-turbo",

|

||||

"timeout": 10,

|

||||

"model": config("OPENAI_CHAT_MODEL", default="gpt-3.5-turbo"),

|

||||

"timeout": 20,

|

||||

},

|

||||

"default": False,

|

||||

"default": True,

|

||||

}

|

||||

if len(KH_EMBEDDINGS) < 1:

|

||||

KH_EMBEDDINGS["openai"] = {

|

||||

"spec": {

|

||||

"__type__": "kotaemon.embeddings.OpenAIEmbeddings",

|

||||

"base_url": config("OPENAI_API_BASE", default="")

|

||||

or "https://api.openai.com/v1",

|

||||

"base_url": config("OPENAI_API_BASE", default="https://api.openai.com/v1"),

|

||||

"api_key": config("OPENAI_API_KEY", default=""),

|

||||

"model": config(

|

||||

"OPENAI_EMBEDDINGS_MODEL", default="text-embedding-ada-002"

|

||||

)

|

||||

or "text-embedding-ada-002",

|

||||

),

|

||||

"timeout": 10,

|

||||

},

|

||||

"default": False,

|

||||

}

|

||||

|

||||

if config("LOCAL_MODEL", default=""):

|

||||

KH_LLMS["local"] = {

|

||||

"spec": {

|

||||

"__type__": "kotaemon.llms.EndpointChatLLM",

|

||||

"endpoint_url": "http://localhost:31415/v1/chat/completions",

|

||||

},

|

||||

"default": False,

|

||||

"cost": 0,

|

||||

}

|

||||

if len(KH_EMBEDDINGS) < 1:

|

||||

KH_EMBEDDINGS["local"] = {

|

||||

"spec": {

|

||||

"__type__": "kotaemon.embeddings.EndpointEmbeddings",

|

||||

"endpoint_url": "http://localhost:31415/v1/embeddings",

|

||||

},

|

||||

"default": False,

|

||||

"cost": 0,

|

||||

}

|

||||

|

||||

if len(KH_EMBEDDINGS) < 1:

|

||||

KH_EMBEDDINGS["local-bge-base-en-v1.5"] = {

|

||||

"spec": {

|

||||

"__type__": "kotaemon.embeddings.FastEmbedEmbeddings",

|

||||

"model_name": "BAAI/bge-base-en-v1.5",

|

||||

"context_length": 8191,

|

||||

},

|

||||

"default": True,

|

||||

}

|

||||

|

||||

KH_REASONINGS = ["ktem.reasoning.simple.FullQAPipeline"]

|

||||

if config("LOCAL_MODEL", default=""):

|

||||

KH_LLMS["ollama"] = {

|

||||

"spec": {

|

||||

"__type__": "kotaemon.llms.ChatOpenAI",

|

||||

"base_url": "http://localhost:11434/v1/",

|

||||

"model": config("LOCAL_MODEL", default="llama3.1:8b"),

|

||||

},

|

||||

"default": False,

|

||||

}

|

||||

KH_EMBEDDINGS["ollama"] = {

|

||||

"spec": {

|

||||

"__type__": "kotaemon.embeddings.OpenAIEmbeddings",

|

||||

"base_url": "http://localhost:11434/v1/",

|

||||

"model": config("LOCAL_MODEL_EMBEDDINGS", default="nomic-embed-text"),

|

||||

},

|

||||

"default": False,

|

||||

}

|

||||

|

||||

KH_EMBEDDINGS["local-bge-en"] = {

|

||||

"spec": {

|

||||

"__type__": "kotaemon.embeddings.FastEmbedEmbeddings",

|

||||

"model_name": "BAAI/bge-base-en-v1.5",

|

||||

},

|

||||

"default": False,

|

||||

}

|

||||

|

||||

KH_REASONINGS = [

|

||||

"ktem.reasoning.simple.FullQAPipeline",

|

||||

"ktem.reasoning.simple.FullDecomposeQAPipeline",

|

||||

"ktem.reasoning.react.ReactAgentPipeline",

|

||||

"ktem.reasoning.rewoo.RewooAgentPipeline",

|

||||

]

|

||||

KH_REASONINGS_USE_MULTIMODAL = False

|

||||

KH_VLM_ENDPOINT = "{0}/openai/deployments/{1}/chat/completions?api-version={2}".format(

|

||||

config("AZURE_OPENAI_ENDPOINT", default=""),

|

||||

config("OPENAI_VISION_DEPLOYMENT_NAME", default="gpt-4-vision"),

|

||||

config("OPENAI_VISION_DEPLOYMENT_NAME", default="gpt-4o"),

|

||||

config("OPENAI_API_VERSION", default=""),

|

||||

)

|

||||

|

||||

|

||||

SETTINGS_APP = {

|

||||

"lang": {

|

||||

"name": "Language",

|

||||

"value": "en",

|

||||

"choices": [("English", "en"), ("Japanese", "ja")],

|

||||

"component": "dropdown",

|

||||

}

|

||||

}

|

||||

SETTINGS_APP: dict[str, dict] = {}

|

||||

|

||||

|

||||

SETTINGS_REASONING = {

|

||||

|

|

@ -187,17 +199,42 @@ SETTINGS_REASONING = {

|

|||

"lang": {

|

||||

"name": "Language",

|

||||

"value": "en",

|

||||

"choices": [("English", "en"), ("Japanese", "ja")],

|

||||

"choices": [("English", "en"), ("Japanese", "ja"), ("Vietnamese", "vi")],

|

||||

"component": "dropdown",

|

||||

},

|

||||

"max_context_length": {

|

||||

"name": "Max context length (LLM)",

|

||||

"value": 32000,

|

||||

"component": "number",

|

||||

},

|

||||

}

|

||||

|

||||

|

||||

KH_INDEX_TYPES = ["ktem.index.file.FileIndex"]

|

||||

KH_INDEX_TYPES = [

|

||||

"ktem.index.file.FileIndex",

|

||||

"ktem.index.file.graph.GraphRAGIndex",

|

||||

]

|

||||

KH_INDICES = [

|

||||

{

|

||||

"name": "File",

|

||||

"config": {},

|

||||

"config": {

|

||||

"supported_file_types": (

|

||||

".png, .jpeg, .jpg, .tiff, .tif, .pdf, .xls, .xlsx, .doc, .docx, "

|

||||

".pptx, .csv, .html, .mhtml, .txt, .zip"

|

||||

),

|

||||

"private": False,

|

||||

},

|

||||

"index_type": "ktem.index.file.FileIndex",

|

||||

},

|

||||

{

|

||||

"name": "GraphRAG",

|

||||

"config": {

|

||||

"supported_file_types": (

|

||||

".png, .jpeg, .jpg, .tiff, .tif, .pdf, .xls, .xlsx, .doc, .docx, "

|

||||

".pptx, .csv, .html, .mhtml, .txt, .zip"

|

||||

),

|

||||

"private": False,

|

||||

},

|

||||

"index_type": "ktem.index.file.graph.GraphRAGIndex",

|

||||

},

|

||||

]

|

||||

|

|

|

|||

|

|

@ -39,16 +39,11 @@ class ReactAgent(BaseAgent):

|

|||

)

|

||||

max_iterations: int = 5

|

||||

strict_decode: bool = False

|

||||

trim_func: TokenSplitter = TokenSplitter.withx(

|

||||

chunk_size=800,

|

||||

chunk_overlap=0,

|

||||

separator=" ",

|

||||

tokenizer=partial(

|

||||

tiktoken.encoding_for_model("gpt-3.5-turbo").encode,

|

||||

allowed_special=set(),

|

||||

disallowed_special="all",

|

||||

),

|

||||

max_context_length: int = Param(

|

||||

default=3000,

|

||||

help="Max context length for each tool output.",

|

||||

)

|

||||

trim_func: TokenSplitter | None = None

|

||||

|

||||

def _compose_plugin_description(self) -> str:

|

||||

"""

|

||||

|

|

@ -149,14 +144,28 @@ class ReactAgent(BaseAgent):

|

|||

function_map[plugin.name] = plugin

|

||||

return function_map

|

||||

|

||||

def _trim(self, text: str) -> str:

|

||||

def _trim(self, text: str | Document) -> str:

|

||||

"""

|

||||

Trim the text to the maximum token length.

|

||||

"""

|

||||

evidence_trim_func = (

|

||||

self.trim_func

|

||||

if self.trim_func

|

||||

else TokenSplitter(

|

||||

chunk_size=self.max_context_length,

|

||||

chunk_overlap=0,

|

||||

separator=" ",

|

||||

tokenizer=partial(

|

||||

tiktoken.encoding_for_model("gpt-3.5-turbo").encode,

|

||||

allowed_special=set(),

|

||||

disallowed_special="all",

|

||||

),

|

||||

)

|

||||

)

|

||||

if isinstance(text, str):

|

||||

texts = self.trim_func([Document(text=text)])

|

||||

texts = evidence_trim_func([Document(text=text)])

|

||||

elif isinstance(text, Document):

|

||||

texts = self.trim_func([text])

|

||||

texts = evidence_trim_func([text])

|

||||

else:

|

||||

raise ValueError("Invalid text type to trim")

|

||||

trim_text = texts[0].text

|

||||

|

|

|

|||

|

|

@ -39,16 +39,11 @@ class RewooAgent(BaseAgent):

|

|||

examples: dict[str, str | list[str]] = Param(

|

||||

default_callback=lambda _: {}, help="Examples to be used in the agent."

|

||||

)

|

||||

trim_func: TokenSplitter = TokenSplitter.withx(

|

||||

chunk_size=3000,

|

||||

chunk_overlap=0,

|

||||

separator=" ",

|

||||

tokenizer=partial(

|

||||

tiktoken.encoding_for_model("gpt-3.5-turbo").encode,

|

||||

allowed_special=set(),

|

||||

disallowed_special="all",

|

||||

),

|

||||

max_context_length: int = Param(

|

||||

default=3000,

|

||||

help="Max context length for each tool output.",

|

||||

)

|

||||

trim_func: TokenSplitter | None = None

|

||||

|

||||

@Node.auto(depends_on=["planner_llm", "plugins", "prompt_template", "examples"])

|

||||

def planner(self):

|

||||

|

|

@ -248,8 +243,22 @@ class RewooAgent(BaseAgent):

|

|||

return p

|

||||

|

||||

def _trim_evidence(self, evidence: str):

|

||||

evidence_trim_func = (

|

||||

self.trim_func

|

||||

if self.trim_func

|

||||

else TokenSplitter(

|

||||

chunk_size=self.max_context_length,

|

||||

chunk_overlap=0,

|

||||

separator=" ",

|

||||

tokenizer=partial(

|

||||

tiktoken.encoding_for_model("gpt-3.5-turbo").encode,

|

||||

allowed_special=set(),

|

||||

disallowed_special="all",

|

||||

),

|

||||

)

|

||||

)

|

||||

if evidence:

|

||||

texts = self.trim_func([Document(text=evidence)])

|

||||

texts = evidence_trim_func([Document(text=evidence)])

|

||||

evidence = texts[0].text

|

||||

logging.info(f"len (trimmed): {len(evidence)}")

|

||||

return evidence

|

||||

|

|

@ -317,6 +326,14 @@ class RewooAgent(BaseAgent):

|

|||

)

|

||||

|

||||

print("Planner output:", planner_text_output)

|

||||

# output planner to info panel

|

||||

yield AgentOutput(

|

||||

text="",

|

||||

agent_type=self.agent_type,

|

||||

status="thinking",

|

||||

intermediate_steps=[{"planner_log": planner_text_output}],

|

||||

)

|

||||

|

||||

# Work

|

||||

worker_evidences, plugin_cost, plugin_token = self._get_worker_evidence(

|

||||

planner_evidences, evidence_level

|

||||

|

|

@ -326,7 +343,9 @@ class RewooAgent(BaseAgent):

|

|||

worker_log += f"{plan}: {plans[plan]}\n"

|

||||

current_progress = f"{plan}: {plans[plan]}\n"

|

||||

for e in plan_to_es[plan]:

|

||||

worker_log += f"#Action: {planner_evidences.get(e, None)}\n"

|

||||

worker_log += f"{e}: {worker_evidences[e]}\n"

|

||||

current_progress += f"#Action: {planner_evidences.get(e, None)}\n"

|

||||

current_progress += f"{e}: {worker_evidences[e]}\n"

|

||||

|

||||

yield AgentOutput(

|

||||

|

|

|

|||

|

|

@ -1,7 +1,7 @@

|

|||

from typing import AnyStr, Optional, Type

|

||||

from urllib.error import HTTPError

|

||||

|

||||

from langchain.utilities import SerpAPIWrapper

|

||||

from langchain_community.utilities import SerpAPIWrapper

|

||||

from pydantic import BaseModel, Field

|

||||

|

||||

from .base import BaseTool

|

||||

|

|

|

|||

|

|

@ -22,12 +22,16 @@ class LLMTool(BaseTool):

|

|||

)

|

||||

llm: BaseLLM

|

||||

args_schema: Optional[Type[BaseModel]] = LLMArgs

|

||||

dummy_mode: bool = True

|

||||

|

||||

def _run_tool(self, query: AnyStr) -> str:

|

||||

output = None

|

||||

try:

|

||||

if not self.dummy_mode:

|

||||

response = self.llm(query)

|

||||

else:

|

||||

response = None

|

||||

except ValueError:

|

||||

raise ToolException("LLM Tool call failed")

|

||||

output = response.text

|

||||

output = response.text if response else "<->"

|

||||

return output

|

||||

|

|

|

|||

|

|

@ -5,8 +5,8 @@ from typing import TYPE_CHECKING, Any, Literal, Optional, TypeVar

|

|||

from langchain.schema.messages import AIMessage as LCAIMessage

|

||||

from langchain.schema.messages import HumanMessage as LCHumanMessage

|

||||

from langchain.schema.messages import SystemMessage as LCSystemMessage

|

||||

from llama_index.bridge.pydantic import Field

|

||||

from llama_index.schema import Document as BaseDocument

|

||||

from llama_index.core.bridge.pydantic import Field

|

||||

from llama_index.core.schema import Document as BaseDocument

|

||||

|

||||

if TYPE_CHECKING:

|

||||

from haystack.schema import Document as HaystackDocument

|

||||

|

|

@ -38,7 +38,7 @@ class Document(BaseDocument):

|

|||

|

||||

content: Any = None

|

||||

source: Optional[str] = None

|

||||

channel: Optional[Literal["chat", "info", "index", "debug"]] = None

|

||||

channel: Optional[Literal["chat", "info", "index", "debug", "plot"]] = None

|

||||

|

||||

def __init__(self, content: Optional[Any] = None, *args, **kwargs):

|

||||

if content is None:

|

||||

|

|

@ -140,6 +140,7 @@ class LLMInterface(AIMessage):

|

|||

total_cost: float = 0

|

||||

logits: list[list[float]] = Field(default_factory=list)

|

||||

messages: list[AIMessage] = Field(default_factory=list)

|

||||

logprobs: list[float] = []

|

||||

|

||||

|

||||

class ExtractorOutput(Document):

|

||||

|

|

|

|||

|

|

@ -133,9 +133,7 @@ def construct_chat_ui(

|

|||

label="Output file", show_label=True, height=100

|

||||

)

|

||||

export_btn = gr.Button("Export")

|

||||

export_btn.click(

|

||||

func_export_to_excel, inputs=None, outputs=exported_file

|

||||

)

|

||||

export_btn.click(func_export_to_excel, inputs=[], outputs=exported_file)

|

||||

|

||||

with gr.Row():

|

||||

with gr.Column():

|

||||

|

|

|

|||

|

|

@ -91,7 +91,7 @@ def construct_pipeline_ui(

|

|||

save_btn.click(func_save, inputs=params, outputs=history_dataframe)

|

||||

load_params_btn = gr.Button("Reload params")

|

||||

load_params_btn.click(

|

||||

func_load_params, inputs=None, outputs=history_dataframe

|

||||

func_load_params, inputs=[], outputs=history_dataframe

|

||||

)

|

||||

history_dataframe.render()

|

||||

history_dataframe.select(

|

||||

|

|

@ -103,7 +103,7 @@ def construct_pipeline_ui(

|

|||

export_btn = gr.Button(

|

||||

"Export (Result will be in Exported file next to Output)"

|

||||

)

|

||||

export_btn.click(func_export, inputs=None, outputs=exported_file)

|

||||

export_btn.click(func_export, inputs=[], outputs=exported_file)

|

||||

with gr.Row():

|

||||

with gr.Column():

|

||||

if params:

|

||||

|

|

|

|||

|

|

@ -1,5 +1,15 @@

|

|||

from itertools import islice

|

||||

from typing import Optional

|

||||

|

||||

import numpy as np

|

||||

import openai

|

||||

import tiktoken

|

||||

from tenacity import (

|

||||

retry,

|

||||

retry_if_not_exception_type,

|

||||

stop_after_attempt,

|

||||

wait_random_exponential,

|

||||

)

|

||||

from theflow.utils.modules import import_dotted_string

|

||||

|

||||

from kotaemon.base import Param

|

||||

|

|

@ -7,6 +17,24 @@ from kotaemon.base import Param

|

|||

from .base import BaseEmbeddings, Document, DocumentWithEmbedding

|

||||

|

||||

|

||||

def split_text_by_chunk_size(text: str, chunk_size: int) -> list[list[int]]:

|

||||

"""Split the text into chunks of a given size

|

||||

|

||||

Args:

|

||||

text: text to split

|

||||

chunk_size: size of each chunk

|

||||

|

||||

Returns:

|

||||

list of chunks (as tokens)

|

||||

"""

|

||||

encoding = tiktoken.get_encoding("cl100k_base")

|

||||

tokens = iter(encoding.encode(text))

|

||||

result = []

|

||||

while chunk := list(islice(tokens, chunk_size)):

|

||||

result.append(chunk)

|

||||

return result

|

||||

|

||||

|

||||

class BaseOpenAIEmbeddings(BaseEmbeddings):

|

||||

"""Base interface for OpenAI embedding model, using the openai library.

|

||||

|

||||

|

|

@ -32,6 +60,9 @@ class BaseOpenAIEmbeddings(BaseEmbeddings):

|

|||

"Only supported in `text-embedding-3` and later models."

|

||||

),

|

||||

)

|

||||

context_length: Optional[int] = Param(

|

||||

None, help="The maximum context length of the embedding model"

|

||||

)

|

||||

|

||||

@Param.auto(depends_on=["max_retries"])

|

||||

def max_retries_(self):

|

||||

|

|

@ -56,16 +87,42 @@ class BaseOpenAIEmbeddings(BaseEmbeddings):

|

|||

def invoke(

|

||||

self, text: str | list[str] | Document | list[Document], *args, **kwargs

|

||||

) -> list[DocumentWithEmbedding]:

|

||||

input_ = self.prepare_input(text)

|

||||

input_doc = self.prepare_input(text)

|

||||

client = self.prepare_client(async_version=False)

|

||||

resp = self.openai_response(

|

||||

client, input=[_.text if _.text else " " for _ in input_], **kwargs

|

||||

).dict()

|

||||

output_ = sorted(resp["data"], key=lambda x: x["index"])

|

||||

return [

|

||||

DocumentWithEmbedding(embedding=o["embedding"], content=i)

|

||||

for i, o in zip(input_, output_)

|

||||

|

||||

input_: list[str | list[int]] = []

|

||||

splitted_indices = {}

|

||||

for idx, text in enumerate(input_doc):

|

||||

if self.context_length:

|

||||

chunks = split_text_by_chunk_size(text.text or " ", self.context_length)

|

||||

splitted_indices[idx] = (len(input_), len(input_) + len(chunks))

|

||||

input_.extend(chunks)

|

||||

else:

|

||||

splitted_indices[idx] = (len(input_), len(input_) + 1)

|

||||

input_.append(text.text)

|

||||

|

||||

resp = self.openai_response(client, input=input_, **kwargs).dict()

|

||||

output_ = list(sorted(resp["data"], key=lambda x: x["index"]))

|

||||

|

||||

output = []

|

||||

for idx, doc in enumerate(input_doc):

|

||||

embs = output_[splitted_indices[idx][0] : splitted_indices[idx][1]]

|

||||

if len(embs) == 1:

|

||||

output.append(

|

||||

DocumentWithEmbedding(embedding=embs[0]["embedding"], content=doc)

|

||||

)

|

||||

continue

|

||||

|

||||

chunk_lens = [

|

||||

len(_)

|

||||

for _ in input_[splitted_indices[idx][0] : splitted_indices[idx][1]]

|

||||

]

|

||||

vs: list[list[float]] = [_["embedding"] for _ in embs]

|

||||

emb = np.average(vs, axis=0, weights=chunk_lens)

|

||||

emb = emb / np.linalg.norm(emb)

|

||||

output.append(DocumentWithEmbedding(embedding=emb.tolist(), content=doc))

|

||||

|

||||

return output

|

||||

|

||||

async def ainvoke(

|

||||

self, text: str | list[str] | Document | list[Document], *args, **kwargs

|

||||

|

|

@ -118,6 +175,13 @@ class OpenAIEmbeddings(BaseOpenAIEmbeddings):

|

|||

|

||||

return OpenAI(**params)

|

||||

|

||||

@retry(

|

||||

retry=retry_if_not_exception_type(

|

||||

(openai.NotFoundError, openai.BadRequestError)

|

||||

),

|

||||

wait=wait_random_exponential(min=1, max=40),

|

||||

stop=stop_after_attempt(6),

|

||||

)

|

||||

def openai_response(self, client, **kwargs):

|

||||

"""Get the openai response"""

|

||||

params: dict = {

|

||||

|

|

@ -174,6 +238,13 @@ class AzureOpenAIEmbeddings(BaseOpenAIEmbeddings):

|

|||

|

||||

return AzureOpenAI(**params)

|

||||

|

||||

@retry(

|

||||

retry=retry_if_not_exception_type(

|

||||

(openai.NotFoundError, openai.BadRequestError)

|

||||

),

|

||||

wait=wait_random_exponential(min=1, max=40),

|

||||

stop=stop_after_attempt(6),

|

||||

)

|

||||

def openai_response(self, client, **kwargs):

|

||||

"""Get the openai response"""

|

||||

params: dict = {

|

||||

|

|

|

|||

|

|

@ -3,7 +3,7 @@ from __future__ import annotations

|

|||

from abc import abstractmethod

|

||||

from typing import Any, Type

|

||||

|

||||

from llama_index.node_parser.interface import NodeParser

|

||||

from llama_index.core.node_parser.interface import NodeParser

|

||||

|

||||

from kotaemon.base import BaseComponent, Document, RetrievedDocument

|

||||

|

||||

|

|

@ -32,7 +32,7 @@ class LlamaIndexDocTransformerMixin:

|

|||

Example:

|

||||

class TokenSplitter(LlamaIndexMixin, BaseSplitter):

|

||||

def _get_li_class(self):

|

||||

from llama_index.text_splitter import TokenTextSplitter

|

||||

from llama_index.core.text_splitter import TokenTextSplitter

|

||||

return TokenTextSplitter

|

||||

|

||||

To use this mixin, please:

|

||||

|

|

|

|||

|

|

@ -15,7 +15,7 @@ class TitleExtractor(LlamaIndexDocTransformerMixin, BaseDocParser):

|

|||

super().__init__(llm=llm, nodes=nodes, **params)

|

||||

|

||||

def _get_li_class(self):

|

||||

from llama_index.extractors import TitleExtractor

|

||||

from llama_index.core.extractors import TitleExtractor

|

||||

|

||||

return TitleExtractor

|

||||

|

||||

|

|

@ -30,6 +30,6 @@ class SummaryExtractor(LlamaIndexDocTransformerMixin, BaseDocParser):

|

|||

super().__init__(llm=llm, summaries=summaries, **params)

|

||||

|

||||

def _get_li_class(self):

|

||||

from llama_index.extractors import SummaryExtractor

|

||||

from llama_index.core.extractors import SummaryExtractor

|

||||

|

||||

return SummaryExtractor

|

||||

|

|

|

|||

|

|

@ -1,27 +1,42 @@

|

|||

from pathlib import Path

|

||||

from typing import Type

|

||||

|

||||

from llama_index.readers import PDFReader

|

||||

from llama_index.readers.base import BaseReader

|

||||

from decouple import config

|

||||

from llama_index.core.readers.base import BaseReader

|

||||

from theflow.settings import settings as flowsettings

|

||||

|

||||

from kotaemon.base import BaseComponent, Document, Param

|

||||

from kotaemon.indices.extractors import BaseDocParser

|

||||

from kotaemon.indices.splitters import BaseSplitter, TokenSplitter

|

||||

from kotaemon.loaders import (

|

||||

AdobeReader,

|

||||

AzureAIDocumentIntelligenceLoader,

|

||||

DirectoryReader,

|

||||

HtmlReader,

|

||||

MathpixPDFReader,

|

||||

MhtmlReader,

|

||||

OCRReader,

|

||||

PandasExcelReader,

|

||||

PDFThumbnailReader,

|

||||

UnstructuredReader,

|

||||

)

|

||||

|

||||

unstructured = UnstructuredReader()

|

||||

adobe_reader = AdobeReader()

|

||||

azure_reader = AzureAIDocumentIntelligenceLoader(

|

||||

endpoint=str(config("AZURE_DI_ENDPOINT", default="")),

|

||||

credential=str(config("AZURE_DI_CREDENTIAL", default="")),

|

||||

cache_dir=getattr(flowsettings, "KH_MARKDOWN_OUTPUT_DIR", None),

|

||||

)

|

||||

adobe_reader.vlm_endpoint = azure_reader.vlm_endpoint = getattr(

|

||||

flowsettings, "KH_VLM_ENDPOINT", ""

|

||||

)

|

||||

|

||||

|

||||

KH_DEFAULT_FILE_EXTRACTORS: dict[str, BaseReader] = {

|

||||

".xlsx": PandasExcelReader(),

|

||||

".docx": unstructured,

|

||||

".pptx": unstructured,

|

||||

".xls": unstructured,

|

||||

".doc": unstructured,

|

||||

".html": HtmlReader(),

|

||||

|

|

@ -31,7 +46,7 @@ KH_DEFAULT_FILE_EXTRACTORS: dict[str, BaseReader] = {

|

|||

".jpg": unstructured,

|

||||

".tiff": unstructured,

|

||||

".tif": unstructured,

|

||||

".pdf": PDFReader(),

|

||||

".pdf": PDFThumbnailReader(),

|

||||

}

|

||||

|

||||

|

||||

|

|

|

|||

|

|

@ -103,7 +103,9 @@ class CitationPipeline(BaseComponent):

|

|||

print("CitationPipeline: invoking LLM")

|

||||

llm_output = self.get_from_path("llm").invoke(messages, **llm_kwargs)

|

||||

print("CitationPipeline: finish invoking LLM")

|

||||

if not llm_output.messages:

|

||||

if not llm_output.messages or not llm_output.additional_kwargs.get(

|

||||

"tool_calls"

|

||||

):

|

||||

return None

|

||||

function_output = llm_output.additional_kwargs["tool_calls"][0]["function"][

|

||||

"arguments"

|

||||

|

|

|

|||

|

|

@ -1,5 +1,13 @@

|

|||

from .base import BaseReranking

|

||||

from .cohere import CohereReranking

|

||||

from .llm import LLMReranking

|

||||

from .llm_scoring import LLMScoring

|

||||

from .llm_trulens import LLMTrulensScoring

|

||||

|

||||

__all__ = ["CohereReranking", "LLMReranking", "BaseReranking"]

|

||||

__all__ = [

|

||||

"CohereReranking",

|

||||

"LLMReranking",

|

||||

"LLMScoring",

|

||||

"BaseReranking",

|

||||

"LLMTrulensScoring",

|

||||

]

|

||||

|

|

|

|||

|

|

@ -1,6 +1,6 @@

|

|||

from __future__ import annotations

|

||||

|

||||

import os

|

||||

from decouple import config

|

||||

|

||||

from kotaemon.base import Document

|

||||

|

||||

|

|

@ -9,8 +9,7 @@ from .base import BaseReranking

|

|||

|

||||

class CohereReranking(BaseReranking):

|

||||

model_name: str = "rerank-multilingual-v2.0"

|

||||

cohere_api_key: str = os.environ.get("COHERE_API_KEY", "")

|

||||

top_k: int = 1

|

||||

cohere_api_key: str = config("COHERE_API_KEY", "")

|

||||

|

||||