Treat index id as auto-generated field (#27)

* Treat index id as auto-generated field
* Fix "Can't create index: KeyError: 'embedding'" #28
* Update docs
* Update requirement
* Use lighter default local embedding model

---------

Co-authored-by: ian <ian@cinnamon.is>

Committed by GitHub

Parent: 66905d39c4
Commit: 917fb0a082

@@ -1,6 +1,8 @@

|

||||

# kotaemon

|

||||

|

||||

[Documentation](https://cinnamon.github.io/kotaemon/)

|

||||

|

||||

|

||||

[User Guide](https://cinnamon.github.io/kotaemon/) | [Developer Guide](https://cinnamon.github.io/kotaemon/development/)

|

||||

|

||||

[](https://www.python.org/downloads/release/python-31013/)

|

||||

[](https://github.com/psf/black)

|

||||

|

||||

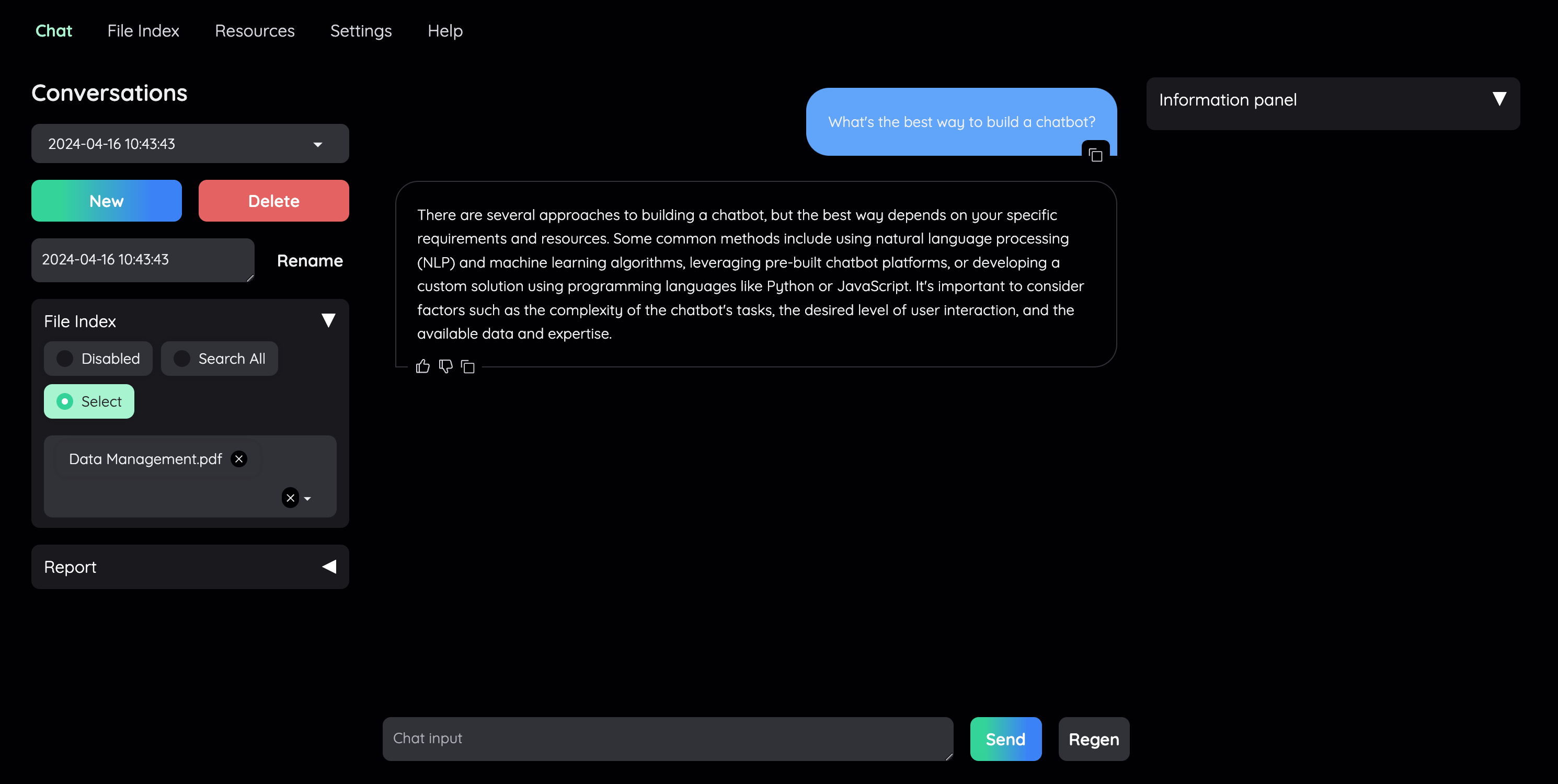

BIN docs/images/chat-tab-demo.png

Normal file. Binary file not shown.

After: 364 KiB

@@ -1,8 +1,9 @@
# Getting Started with Kotaemon

This page is intended for end users who want to use the `kotaemon` tool for Question
Answering on local documents. If you are a developer who wants contribute to the
project, please visit the [development](development/index.md) page.


This page is intended for **end users** who want to use the `kotaemon` tool for Question
Answering on local documents. If you are a **developer** who wants contribute to the project, please visit the [development](development/index.md) page.

## Download

@@ -18,8 +19,7 @@ Download and upzip the latest version of `kotaemon` by clicking this
2. Enable All Applications and choose Terminal.
3. NOTE: If you always want to open that file with Terminal, then check Always Open With.
4. From now on, double click on your file and it should work.
- Linux: `run_linux.sh`. If you are using Linux, you would know how to run a bash
script, right ?
- Linux: `run_linux.sh`. If you are using Linux, you would know how to run a bash script, right ?
2. After the installation, the installer will ask to launch the ktem's UI, answer to continue.
3. If launched, the application will be open automatically in your browser.

@@ -119,10 +119,10 @@ if config("LOCAL_MODEL", default=""):
}

if len(KH_EMBEDDINGS) < 1:
KH_EMBEDDINGS["local-mxbai-large-v1"] = {
KH_EMBEDDINGS["local-bge-base-en-v1.5"] = {
"spec": {
"__type__": "kotaemon.embeddings.FastEmbedEmbeddings",
"model_name": "mixedbread-ai/mxbai-embed-large-v1",
"model_name": "BAAI/bge-base-en-v1.5",
},
"default": True,
}
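The `if len(KH_EMBEDDINGS) < 1:` block registers the local FastEmbed model only when no other embedding model has been configured, and marks it as the default; the commit message describes `BAAI/bge-base-en-v1.5` as a lighter choice than `mixedbread-ai/mxbai-embed-large-v1`. A minimal sketch of that fallback with a plain dict, so the behavior can be checked in isolation. `ensure_default_embedding` is a hypothetical helper, not a ktem function; the entry layout is copied from the new side of the diff.

```python
from typing import Any


def ensure_default_embedding(registry: dict[str, dict[str, Any]]) -> dict[str, dict[str, Any]]:
    """Hypothetical helper mirroring the `if len(KH_EMBEDDINGS) < 1:` block.

    Adds the local FastEmbed entry as the default only when nothing is configured.
    """
    if len(registry) < 1:
        registry["local-bge-base-en-v1.5"] = {
            "spec": {
                "__type__": "kotaemon.embeddings.FastEmbedEmbeddings",
                "model_name": "BAAI/bge-base-en-v1.5",
            },
            "default": True,
        }
    return registry


# Nothing configured: the local model is registered and marked as default.
empty = ensure_default_embedding({})
# A user-configured model is left alone; no local fallback is added.
configured = ensure_default_embedding({"openai": {"spec": {}, "default": True}})
```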

@@ -164,7 +164,6 @@ SETTINGS_REASONING = {
KH_INDEX_TYPES = ["ktem.index.file.FileIndex"]
KH_INDICES = [
{
"id": 1,
"name": "File",
"config": {},
"index_type": "ktem.index.file.FileIndex",

@@ -196,4 +196,4 @@ class EmbeddingManager:
return {vendor.__qualname__: vendor for vendor in self._vendors}


embeddings = EmbeddingManager()
embedding_models_manager = EmbeddingManager()

@@ -5,7 +5,7 @@ import pandas as pd
import yaml
from ktem.app import BasePage

from .manager import embeddings
from .manager import embedding_models_manager


def format_description(cls):

@@ -118,12 +118,12 @@ class EmbeddingManagement(BasePage):
outputs=[self.emb_list],
)
self._app.app.load(
lambda: gr.update(choices=list(embeddings.vendors().keys())),
lambda: gr.update(choices=list(embedding_models_manager.vendors().keys())),
outputs=[self.emb_choices],
)

def on_emb_vendor_change(self, vendor):
vendor = embeddings.vendors()[vendor]
vendor = embedding_models_manager.vendors()[vendor]

required: dict = {}
desc = vendor.describe()

@@ -224,12 +224,12 @@ class EmbeddingManagement(BasePage):
try:
spec = yaml.safe_load(spec)
spec["__type__"] = (
embeddings.vendors()[choices].__module__
embedding_models_manager.vendors()[choices].__module__
+ "."
+ embeddings.vendors()[choices].__qualname__
+ embedding_models_manager.vendors()[choices].__qualname__
)

embeddings.add(name, spec=spec, default=default)
embedding_models_manager.add(name, spec=spec, default=default)
gr.Info(f'Create Embedding model "{name}" successfully')
except Exception as e:
raise gr.Error(f"Failed to create Embedding model {name}: {e}")
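When a model is created, `spec["__type__"]` is stored as the vendor class's `__module__`, a `"."`, and its `__qualname__`; `list_embeddings` later shows only the last dotted segment as the vendor. A minimal sketch of building such a path and resolving it back to the class, using a standard-library class as a stand-in vendor. `dotted_path` and `resolve_dotted` are hypothetical helpers; ktem's own `import_dotted_string` (called in `IndexManager`) is not shown in this diff and may work differently.

```python
import importlib
from collections import OrderedDict


def dotted_path(cls: type) -> str:
    """Build the "__type__" string the way the diff does: module + "." + qualname."""
    return cls.__module__ + "." + cls.__qualname__


def resolve_dotted(path: str) -> type:
    """Hypothetical resolver: import the longest importable module prefix,
    then walk the remaining attributes (so "Outer.Inner" qualnames also work)."""
    parts = path.split(".")
    for i in range(len(parts) - 1, 0, -1):
        try:
            obj = importlib.import_module(".".join(parts[:i]))
        except ImportError:
            continue  # this prefix is not a module; try a shorter one
        for attr in parts[i:]:
            obj = getattr(obj, attr)
        return obj
    raise ImportError(f"cannot resolve {path!r}")


path = dotted_path(OrderedDict)
# What list_embeddings would show in its "vendor" column.
vendor_name = path.split(".")[-1]
```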

@@ -237,7 +237,7 @@ class EmbeddingManagement(BasePage):
def list_embeddings(self):
"""List the Embedding models"""
items = []
for item in embeddings.info().values():
for item in embedding_models_manager.info().values():
record = {}
record["name"] = item["name"]
record["vendor"] = item["spec"].get("__type__", "-").split(".")[-1]

@@ -280,9 +280,9 @@ class EmbeddingManagement(BasePage):
btn_delete_yes = gr.update(visible=False)
btn_delete_no = gr.update(visible=False)

info = deepcopy(embeddings.info()[selected_emb_name])
info = deepcopy(embedding_models_manager.info()[selected_emb_name])
vendor_str = info["spec"].pop("__type__", "-").split(".")[-1]
vendor = embeddings.vendors()[vendor_str]
vendor = embedding_models_manager.vendors()[vendor_str]

edit_spec = yaml.dump(info["spec"])
edit_spec_desc = format_description(vendor)

@@ -309,15 +309,19 @@ class EmbeddingManagement(BasePage):
def save_emb(self, selected_emb_name, default, spec):
try:
spec = yaml.safe_load(spec)
spec["__type__"] = embeddings.info()[selected_emb_name]["spec"]["__type__"]
embeddings.update(selected_emb_name, spec=spec, default=default)
spec["__type__"] = embedding_models_manager.info()[selected_emb_name][
"spec"
]["__type__"]
embedding_models_manager.update(
selected_emb_name, spec=spec, default=default
)
gr.Info(f'Save Embedding model "{selected_emb_name}" successfully')
except Exception as e:
gr.Error(f'Failed to save Embedding model "{selected_emb_name}": {e}')

def delete_emb(self, selected_emb_name):
try:
embeddings.delete(selected_emb_name)
embedding_models_manager.delete(selected_emb_name)
except Exception as e:
gr.Error(f'Failed to delete Embedding model "{selected_emb_name}": {e}')
return selected_emb_name

@@ -293,10 +293,10 @@ class FileIndex(BaseIndex):

@classmethod
def get_admin_settings(cls):
from ktem.embeddings.manager import embeddings
from ktem.embeddings.manager import embedding_models_manager

embedding_default = embeddings.get_default_name()
embedding_choices = list(embeddings.options().keys())
embedding_default = embedding_models_manager.get_default_name()
embedding_choices = list(embedding_models_manager.options().keys())

return {
"embedding": {

@@ -12,7 +12,7 @@ from typing import Optional
import gradio as gr
from ktem.components import filestorage_path
from ktem.db.models import engine
from ktem.embeddings.manager import embeddings
from ktem.embeddings.manager import embedding_models_manager
from llama_index.vector_stores import (
FilterCondition,
FilterOperator,

@@ -225,7 +225,9 @@ class DocumentRetrievalPipeline(BaseFileIndexRetriever):
if not user_settings["use_reranking"]:
retriever.reranker = None # type: ignore

retriever.vector_retrieval.embedding = embeddings[index_settings["embedding"]]
retriever.vector_retrieval.embedding = embedding_models_manager[
index_settings.get("embedding", embedding_models_manager.get_default_name())
]
kwargs = {
".top_k": int(user_settings["num_retrieval"]),
".mmr": user_settings["mmr"],

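The retrieval and indexing pipelines previously indexed `index_settings["embedding"]` directly, which raises `KeyError: 'embedding'` for an index whose settings lack that key (#28 in the commit message). The new lines fall back to the manager's default model name. A self-contained sketch of the same lookup, assuming a hypothetical `ModelRegistry` that exposes only the two members the diff relies on, `__getitem__` and `get_default_name()`:

```python
from typing import Any


class ModelRegistry:
    """Hypothetical, minimal stand-in for the embedding model manager."""

    def __init__(self, models: dict[str, Any], default: str) -> None:
        self._models = models
        self._default = default

    def get_default_name(self) -> str:
        return self._default

    def __getitem__(self, name: str) -> Any:
        return self._models[name]


def pick_embedding(index_settings: dict[str, Any], manager: ModelRegistry) -> Any:
    # Old form: manager[index_settings["embedding"]] raised KeyError('embedding')
    # whenever the index settings had no "embedding" key.
    # New form: fall back to the manager's default model name.
    return manager[index_settings.get("embedding", manager.get_default_name())]


manager = ModelRegistry(
    {"local-bge-base-en-v1.5": "bge-model", "openai": "openai-model"},
    default="local-bge-base-en-v1.5",
)
from_default = pick_embedding({}, manager)
from_setting = pick_embedding({"embedding": "openai"}, manager)
```

`dict.get` only covers a missing key: if the stored name refers to a model that has since been deleted, the registry lookup itself still raises `KeyError` in this sketch.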
@@ -436,7 +438,9 @@ class IndexDocumentPipeline(BaseFileIndexIndexing):
if chunk_overlap:
obj.file_ingestor.text_splitter.chunk_overlap = chunk_overlap

obj.indexing_vector_pipeline.embedding = embeddings[index_settings["embedding"]]
obj.indexing_vector_pipeline.embedding = embedding_models_manager[
index_settings.get("embedding", embedding_models_manager.get_default_name())
]

return obj

@@ -58,6 +58,10 @@ class IndexManager:
index_cls = import_dotted_string(index_type, safe=False)
index = index_cls(app=self._app, id=entry.id, name=name, config=config)
index.on_create()

# update the entry
entry.config = index.config
sess.commit()
except Exception as e:
sess.delete(entry)
sess.commit()
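In `IndexManager`, the index object is built with the database-assigned `entry.id`, `on_create()` may adjust its config, and the adjusted config is now written back to the entry; on failure the entry is deleted. A sketch of that flow with the standard-library `sqlite3` module, assuming a hypothetical `indices` table and a hypothetical `on_create` callback in place of ktem's SQLModel session and index classes. The sketch re-raises after cleaning up; the diff does not show what ktem does after `sess.commit()` in its `except` branch.

```python
import json
import sqlite3
from typing import Callable


def build_index(
    conn: sqlite3.Connection,
    name: str,
    config: dict,
    on_create: Callable[[int, dict], dict],
) -> int:
    """Create a row, run the creation hook, then persist the hook's config.

    `on_create` is a hypothetical callback standing in for `index.on_create()`:
    it receives the database-assigned id and returns the (possibly updated) config.
    """
    cur = conn.execute(
        "INSERT INTO indices (name, config) VALUES (?, ?)",
        (name, json.dumps(config)),
    )
    conn.commit()
    entry_id = cur.lastrowid  # assigned by SQLite, not taken from settings
    try:
        new_config = on_create(entry_id, config)
        # Write back whatever the hook changed, like `entry.config = index.config`.
        conn.execute(
            "UPDATE indices SET config = ? WHERE id = ?",
            (json.dumps(new_config), entry_id),
        )
        conn.commit()
    except Exception:
        # Drop the half-created row, like `sess.delete(entry)`, then re-raise.
        conn.execute("DELETE FROM indices WHERE id = ?", (entry_id,))
        conn.commit()
        raise
    return entry_id


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE indices ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, name TEXT UNIQUE NOT NULL, config TEXT NOT NULL)"
)


def add_embedding_default(index_id: int, cfg: dict) -> dict:
    # Hypothetical hook that fills in a setting during creation.
    return {**cfg, "embedding": "local-bge-base-en-v1.5"}


def failing_hook(index_id: int, cfg: dict) -> dict:
    raise RuntimeError("on_create failed")


first = build_index(conn, "File", {}, add_embedding_default)
try:
    build_index(conn, "Broken", {}, failing_hook)
except RuntimeError:
    pass  # the failed index leaves no row behind

rows = conn.execute("SELECT id, name, config FROM indices").fetchall()
```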

@@ -177,7 +181,7 @@ class IndexManager:
self.load_index_types()

for index in settings.KH_INDICES:
if not self.exists(index["id"]):
if not self.exists(name=index["name"]):
self.build_index(**index)

with Session(engine) as sess:
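Because `KH_INDICES` no longer carries an `"id"`, start-up seeding now checks for an existing index by name and lets storage assign the id. A minimal in-memory sketch of that idempotent seeding, assuming a hypothetical `IndexRegistry` in place of `IndexManager` and its database table; `exists(name=...)` and `build_index(**index)` mirror the calls in the diff.

```python
import itertools
from typing import Any


class IndexRegistry:
    """Hypothetical in-memory stand-in for IndexManager and its index table."""

    def __init__(self) -> None:
        self._rows: dict[int, dict[str, Any]] = {}
        # Ids come from a counter, the way a database assigns primary keys;
        # they are never read from the settings.
        self._next_id = itertools.count(1)

    def __len__(self) -> int:
        return len(self._rows)

    def ids(self) -> list[int]:
        return list(self._rows)

    def exists(self, name: str) -> bool:
        return any(row["name"] == name for row in self._rows.values())

    def build_index(self, name: str, config: dict, index_type: str) -> int:
        new_id = next(self._next_id)
        self._rows[new_id] = {"name": name, "config": config, "index_type": index_type}
        return new_id


# Same shape as the new KH_INDICES entry: no "id" key any more.
KH_INDICES = [
    {"name": "File", "config": {}, "index_type": "ktem.index.file.FileIndex"},
]


def seed_default_indices(registry: IndexRegistry) -> None:
    for index in KH_INDICES:
        # Look the index up by name, as in `self.exists(name=index["name"])`.
        if not registry.exists(name=index["name"]):
            registry.build_index(**index)


registry = IndexRegistry()
seed_default_indices(registry)
seed_default_indices(registry)  # a second start-up finds "File" and adds nothing
```

Running the seeding twice leaves a single `File` entry with id 1.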

@@ -19,8 +19,10 @@ dependencies = [
"python-decouple",
"sqlalchemy",
"sqlmodel",
"fastembed",
"tiktoken",
"gradio>=4.26.0",
"markdown",
]
readme = "README.md"
license = { text = "MIT License" }
